References

https://waspro.tistory.com/762

https://www.elastic.co/guide/en/cloud-on-k8s/master/k8s-deploy-eck.html

EFK Setup

1. Elasticsearch Setup

Create the namespace

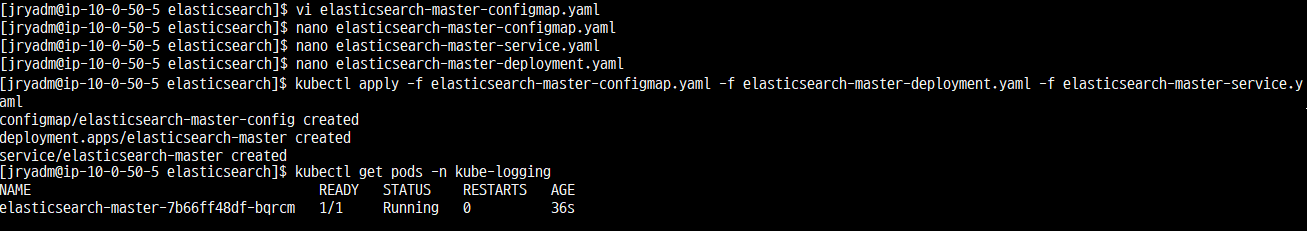
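The namespace step above is shown only as a screenshot, so here is a minimal sketch of one way to do it. It assumes kubectl (v1.18 or later, for --dry-run=client) is already configured against the target cluster. The function name create_logging_namespace is hypothetical and not part of the original post.

```shell
# Sketch: create the kube-logging namespace idempotently.
# Assumption: kubectl already points at the target cluster.
create_logging_namespace() {
  ns="${1:-kube-logging}"
  # Render the Namespace manifest locally, then apply it,
  # so running the function a second time does not fail.
  kubectl create namespace "$ns" --dry-run=client -o yaml | kubectl apply -f -
}
```

Calling create_logging_namespace and then kubectl get namespace kube-logging should list the namespace that every manifest below refers to.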

Create the master node

# elasticsearch-master-configmap.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: kube-logging
  name: elasticsearch-master-config
  labels:
    app: elasticsearch
    role: master
data:
  elasticsearch.yml: |-
    cluster.name: ${CLUSTER_NAME}
    node.name: ${NODE_NAME}
    discovery.seed_hosts: ${NODE_LIST}
    cluster.initial_master_nodes: ${MASTER_NODES}
    network.host: 0.0.0.0
    node:
      master: true
      data: false
      ingest: false
    xpack.security.enabled: true
    xpack.monitoring.collection.enabled: true
---

# elasticsearch-master-service.yaml
---
apiVersion: v1
kind: Service
metadata:
  namespace: kube-logging
  name: elasticsearch-master
  labels:
    app: elasticsearch
    role: master
spec:
  ports:
  - port: 9300
    name: transport
  selector:
    app: elasticsearch
    role: master
---

# elasticsearch-master-deployment.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: kube-logging
  name: elasticsearch-master
  labels:
    app: elasticsearch
    role: master
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
      role: master
  template:
    metadata:
      labels:
        app: elasticsearch
        role: master
    spec:
      containers:
      - name: elasticsearch-master
        image: docker.elastic.co/elasticsearch/elasticsearch:7.3.0
        env:
        - name: CLUSTER_NAME
          value: elasticsearch
        - name: NODE_NAME
          value: elasticsearch-master
        - name: NODE_LIST
          value: elasticsearch-master,elasticsearch-data,elasticsearch-client
        - name: MASTER_NODES
          value: elasticsearch-master
        - name: "ES_JAVA_OPTS"
          value: "-Xms256m -Xmx256m"
        ports:
        - containerPort: 9300
          name: transport
        volumeMounts:
        - name: config
          mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
          readOnly: true
          subPath: elasticsearch.yml
        - name: storage
          mountPath: /data
      volumes:
      - name: config
        configMap:
          name: elasticsearch-master-config
      - name: "storage"
        emptyDir:
          medium: ""
      initContainers:
      - name: increase-vm-max-map
        image: busybox
        command: ["sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
---

Create the data node

# elasticsearch-data-configmap.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: kube-logging
  name: elasticsearch-data-config
  labels:
    app: elasticsearch
    role: data
data:
  elasticsearch.yml: |-
    cluster.name: ${CLUSTER_NAME}
    node.name: ${NODE_NAME}
    discovery.seed_hosts: ${NODE_LIST}
    cluster.initial_master_nodes: ${MASTER_NODES}
    network.host: 0.0.0.0
    node:
      master: false
      data: true
      ingest: false
    xpack.security.enabled: true
    xpack.monitoring.collection.enabled: true
---

# elasticsearch-data-service.yaml
---
apiVersion: v1
kind: Service
metadata:
  namespace: kube-logging
  name: elasticsearch-data
  labels:
    app: elasticsearch
    role: data
spec:
  ports:
  - port: 9300
    name: transport
  selector:
    app: elasticsearch
    role: data
---

# elasticsearch-data-statefulset.yaml
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: kube-logging
  name: elasticsearch-data
  labels:
    app: elasticsearch
    role: data
spec:
  serviceName: "elasticsearch-data"
  selector:
    matchLabels:
      # must match the elasticsearch-data Service selector (app: elasticsearch, role: data)
      app: elasticsearch
      role: data
  replicas: 1
  template:
    metadata:
      labels:
        app: elasticsearch
        role: data
    spec:
      containers:
      - name: elasticsearch-data
        image: docker.elastic.co/elasticsearch/elasticsearch:7.3.0
        env:
        - name: CLUSTER_NAME
          value: elasticsearch
        - name: NODE_NAME
          value: elasticsearch-data
        - name: NODE_LIST
          value: elasticsearch-master,elasticsearch-data,elasticsearch-client
        - name: MASTER_NODES
          value: elasticsearch-master
        - name: "ES_JAVA_OPTS"
          value: "-Xms300m -Xmx300m"
        ports:
        - containerPort: 9300
          name: transport
        volumeMounts:
        - name: config
          mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
          readOnly: true
          subPath: elasticsearch.yml
        - name: elasticsearch-data-persistent-storage
          mountPath: /data/db
      volumes:
      - name: config
        configMap:
          name: elasticsearch-data-config
      initContainers:
      - name: increase-vm-max-map
        image: busybox
        command: ["sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
  volumeClaimTemplates:
  - metadata:
      name: elasticsearch-data-persistent-storage
      annotations:
        volume.beta.kubernetes.io/storage-class: "gp2"
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: standard
      resources:
        requests:
          storage: 10Gi
---

Create the data PV

apiVersion: v1
kind: PersistentVolume
metadata:
  name: elasticsearch-data-persistent-volume-0
  namespace: kube-logging
  labels:
    app: elasticsearch-data
    role: data
spec:
  claimRef:
    apiVersion: v1
    kind: PersistentVolumeClaim
    name: elasticsearch-data-persistent-storage-elasticsearch-data-0
    namespace: kube-logging
  storageClassName: gp2
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  persistentVolumeReclaimPolicy: Recycle
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: "/var/log/elasticsearch-data-0"

The PV and the PVC are now bound to each other.

Once the PV and PVC are bound, the elasticsearch-data-0 Pod, which had been Pending, moves to Running.
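The binding described above can be checked from the command line. This is a minimal sketch: the helper names pvc_phase and pod_phase are hypothetical, while the resource names come from the manifests above.

```shell
# Sketch: print the status phase of a PVC or Pod in kube-logging.
# Assumption: kubectl already points at the target cluster.
pvc_phase() {
  # $1 = PVC name
  kubectl get pvc "$1" -n kube-logging -o jsonpath='{.status.phase}'
}

pod_phase() {
  # $1 = Pod name
  kubectl get pod "$1" -n kube-logging -o jsonpath='{.status.phase}'
}
```

For example, pvc_phase elasticsearch-data-persistent-storage-elasticsearch-data-0 should report Bound, and pod_phase elasticsearch-data-0 should report Running once the volume is attached.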

Create the client node

# elasticsearch-client-configmap.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: kube-logging
  name: elasticsearch-client-config
  labels:
    app: elasticsearch
    role: client
data:
  elasticsearch.yml: |-
    cluster.name: ${CLUSTER_NAME}
    node.name: ${NODE_NAME}
    discovery.seed_hosts: ${NODE_LIST}
    cluster.initial_master_nodes: ${MASTER_NODES}
    network.host: 0.0.0.0
    node:
      master: false
      data: false
      ingest: true
    xpack.security.enabled: true
    xpack.monitoring.collection.enabled: true
---

# elasticsearch-client-service.yaml
---
apiVersion: v1
kind: Service
metadata:
  namespace: kube-logging
  name: elasticsearch-client
  labels:
    app: elasticsearch
    role: client
spec:
  ports:
  - port: 9200
    name: client
  - port: 9300
    name: transport
  selector:
    app: elasticsearch
    role: client
---

# elasticsearch-client-deployment.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: kube-logging
  name: elasticsearch-client
  labels:
    app: elasticsearch
    role: client
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
      role: client
  template:
    metadata:
      labels:
        app: elasticsearch
        role: client
    spec:
      containers:
      - name: elasticsearch-client
        image: docker.elastic.co/elasticsearch/elasticsearch:7.3.0
        env:
        - name: CLUSTER_NAME
          value: elasticsearch
        - name: NODE_NAME
          value: elasticsearch-client
        - name: NODE_LIST
          value: elasticsearch-master,elasticsearch-data,elasticsearch-client
        - name: MASTER_NODES
          value: elasticsearch-master
        - name: "ES_JAVA_OPTS"
          value: "-Xms256m -Xmx256m"
        ports:
        - containerPort: 9200
          name: client
        - containerPort: 9300
          name: transport
        volumeMounts:
        - name: config
          mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
          readOnly: true
          subPath: elasticsearch.yml
        - name: storage
          mountPath: /data
      volumes:
      - name: config
        configMap:
          name: elasticsearch-client-config
      - name: "storage"
        emptyDir:
          medium: ""
      initContainers:
      - name: increase-vm-max-map
        image: busybox
        command: ["sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
---

Verify the deployment

Once the nodes are installed, check the log of the master node to confirm that it started correctly.
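The master log can be read with kubectl. This is a minimal sketch: the helper name master_logs is hypothetical, and because the exact startup message depends on the Elasticsearch version, the function only prints the most recent log lines for manual inspection.

```shell
# Sketch: show the last N log lines of the master Deployment (default 50).
# Assumption: kubectl already points at the target cluster.
master_logs() {
  kubectl logs deployment/elasticsearch-master -n kube-logging --tail="${1:-50}"
}
```

Running master_logs 100 prints the last 100 lines, which is usually enough to see whether the node joined the cluster.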

Apply X-Pack

# Initialize the passwords
kubectl exec -it $(kubectl get pods -n kube-logging | grep elasticsearch-client | sed -n 1p | awk '{print $1}') -n kube-logging -- bin/elasticsearch-setup-passwords auto -b

Changed password for user apm_system
PASSWORD apm_system = 9nLUMUr6h7GncagNEECJ

Changed password for user kibana
PASSWORD kibana = 8wVAKEAu3vfbTD4f20qr

Changed password for user logstash_system
PASSWORD logstash_system = XKx2KLSFiUrBWtHlzWKh

Changed password for user beats_system
PASSWORD beats_system = VPCeDH0bS9NWPOIYa3CF

Changed password for user remote_monitoring_user
PASSWORD remote_monitoring_user = SWzxyRdpBLuEUOkm7lHb

Changed password for user elastic
PASSWORD elastic = 1l5v5JeYCLvvGb5BsvV1

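X-Pack is an Elastic Stack extension that bundles security, alerting, monitoring, reporting, and graph features into a single package that is easy to install. The X-Pack components are designed to work together seamlessly, but each feature can easily be enabled or disabled. To secure the cluster, the X-Pack security module was enabled (see xpack.security.enabled in each node's ConfigMap), and the built-in user passwords are then initialized.

Create the Secret

# Create the Secret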

kubectl create secret generic elasticsearch-pw-elastic -n kube-logging --from-literal password=1l5v5JeYCLvvGb5BsvV1
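Instead of copying the password by hand, the value can be pulled out of the setup output. This is a minimal sketch: the helper name extract_password and the file name es-passwords.txt are hypothetical, and it assumes the output of elasticsearch-setup-passwords was saved in the "PASSWORD user = value" format shown above.

```shell
# Sketch: read elasticsearch-setup-passwords output on stdin and
# print the generated password of one built-in user.
extract_password() {
  # $1 = user name, e.g. elastic
  awk -v user="$1" '$1 == "PASSWORD" && $2 == user { print $4 }'
}

# Hypothetical usage, assuming the output was saved to es-passwords.txt:
#   kubectl create secret generic elasticsearch-pw-elastic -n kube-logging \
#     --from-literal password="$(extract_password elastic < es-passwords.txt)"
```

This keeps the password out of the shell history and out of the document, although the saved output file still has to be protected or deleted afterwards.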

2. Kibana Setup

# kibana-configmap.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: kube-logging
  name: kibana-config
  labels:
    app: kibana
data:
  kibana.yml: |-
    server.host: 0.0.0.0
    elasticsearch:
      hosts: ${ELASTICSEARCH_HOSTS}
      username: ${ELASTICSEARCH_USER}
      password: ${ELASTICSEARCH_PASSWORD}
---

# kibana-service.yaml
---
apiVersion: v1
kind: Service
metadata:
  namespace: kube-logging
  name: kibana
  labels:
    app: kibana
spec:
  type: LoadBalancer
  ports:
  - port: 80
    name: webinterface
    targetPort: 5601
  selector:
    app: kibana
---

# kibana-deployment.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: kube-logging
  name: kibana
  labels:
    app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
      - name: kibana
        image: docker.elastic.co/kibana/kibana:7.3.0
        ports:
        - containerPort: 5601
          name: webinterface
        env:
        - name: ELASTICSEARCH_HOSTS
          value: "http://elasticsearch-client.kube-logging.svc.cluster.local:9200"
        - name: ELASTICSEARCH_USER
          value: "elastic"
        - name: ELASTICSEARCH_PASSWORD
          valueFrom:
            secretKeyRef:
              name: elasticsearch-pw-elastic
              key: password
        volumeMounts:
        - name: config
          mountPath: /usr/share/kibana/config/kibana.yml
          readOnly: true
          subPath: kibana.yml
      volumes:
      - name: config
        configMap:
          name: kibana-config
---

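Create the Secret for the Kibana Deployment

The Kibana Deployment reads the elastic user's password from the elasticsearch-pw-elastic Secret, so use the password generated for the elastic user as the value. If the Secret was already created in the X-Pack step, skip the create command, because kubectl create fails when a Secret with the same name already exists.

# Create the Secret
$ kubectl create secret generic elasticsearch-pw-elastic -n kube-logging --from-literal password=aaaaaaaaaaaaaaaa

# Check the Secret
$ kubectl get secrets -n kube-logging
NAME                       TYPE     DATA   AGE
elasticsearch-pw-elastic   Opaque   1      18s

The Kibana Pod and Deployment should now be in the Running state.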

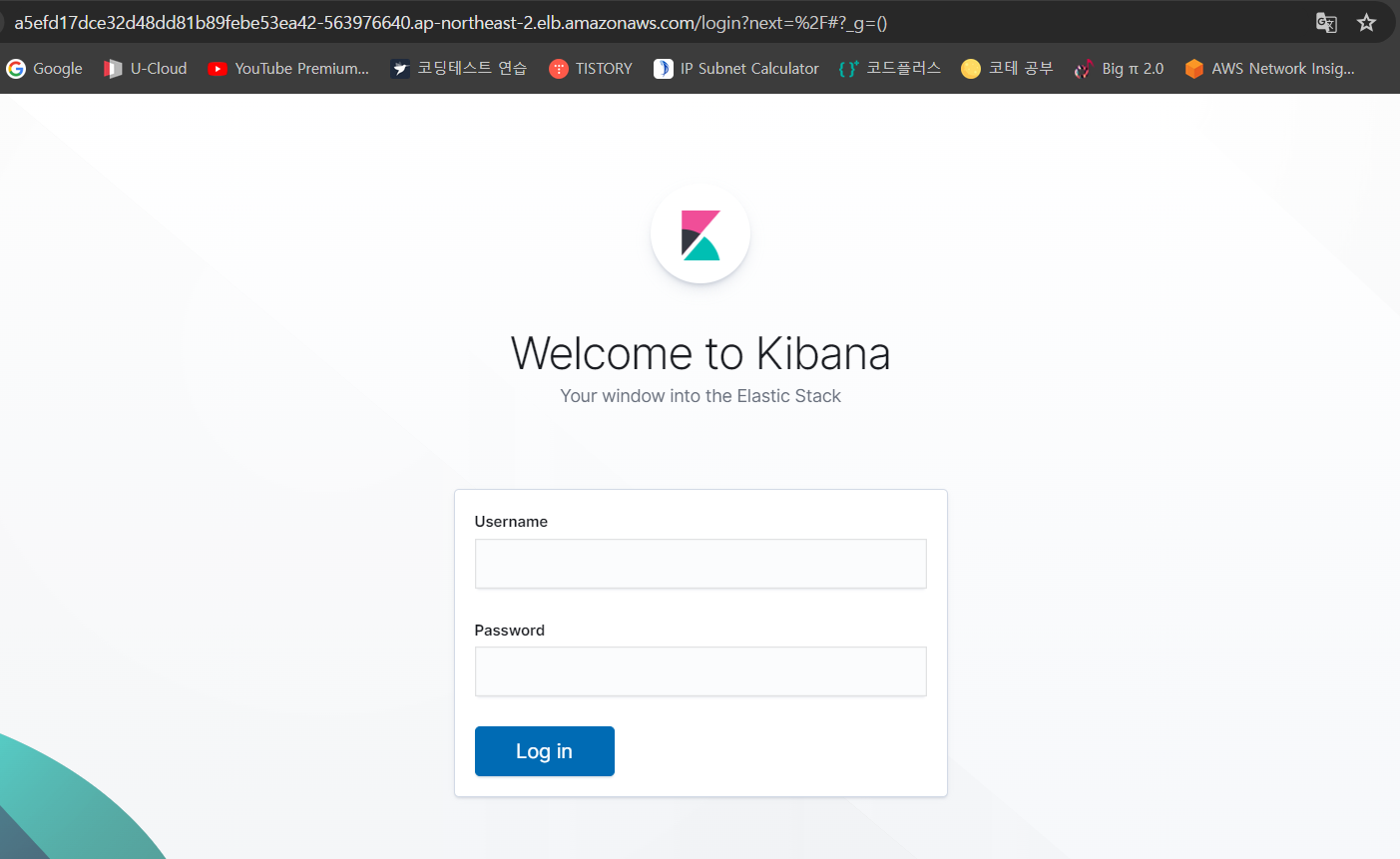

Check the Kibana dashboard

Open the load balancer URL of the kibana Service in a browser. Kibana should be up and its dashboard should load.

Log in with the ID elastic and the password that was initialized for the elastic user earlier.
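The load balancer address can be read from the Service status. This is a minimal sketch: the helper name kibana_address is hypothetical, and it assumes an AWS load balancer, which reports a hostname. Other providers may report an IP, in which case the jsonpath field would be ip instead of hostname.

```shell
# Sketch: print the external hostname of the kibana LoadBalancer Service.
# Assumption: kubectl already points at the target cluster.
kibana_address() {
  kubectl get service kibana -n kube-logging \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}
```

Since the Service exposes port 80, the dashboard should be reachable at http:// followed by the printed hostname once the load balancer has finished provisioning.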

Fluentd is not installed yet at this point, so no logs are being collected. Review the basic environment first, then move on to installing Fluentd.

Check Kibana users

Go to Management (the gear icon on the left) > Security > Users.

Here you can edit user information or add a new user with Create User. New users can be assigned any of the 27 predefined roles.

Built-in roles: https://www.elastic.co/guide/en/elasticsearch/reference/current/built-in-roles.html

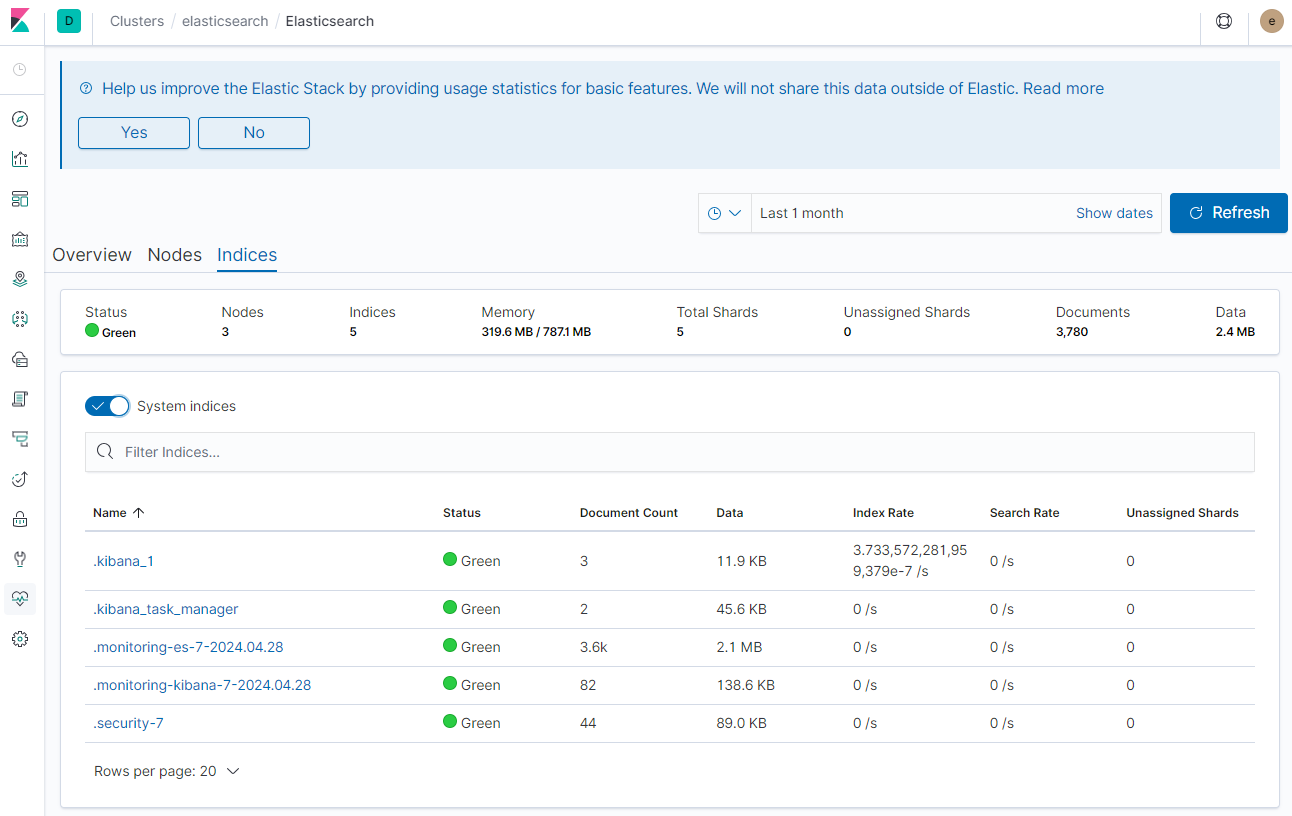

Check Kibana monitoring

Monitoring is the menu item just above Management.

The monitoring screen shows information about both Elasticsearch and Kibana. For Elasticsearch, it provides separate views for resource, node, index, and log information.

Elasticsearch monitoring

Overview (resource information)

Nodes (node information)

The Nodes view lists the Elasticsearch nodes. The node marked with a star is the master node.

Indices (index statistics)

For Kibana, it shows client requests, response time, and instance information.

Kibana monitoring

Overview

Instances

Index patterns

3. Fluentd Setup

# fluentd-config-map.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluentd-config
  namespace: kube-logging
data:
  fluent.conf: |
    <match fluent.**>
      # this tells fluentd to not output its log on stdout
      @type null
    </match>
    # here we read the logs from Docker's containers and parse them
    <source>
      @type tail
      path /var/log/containers/*.log
      pos_file /var/log/app.log.pos
      tag kubernetes.*
      read_from_head true
      <parse>
        @type json
        time_format %Y-%m-%dT%H:%M:%S.%NZ
      </parse>
    </source>
    # we use kubernetes metadata plugin to add metadatas to the log
    <filter kubernetes.**>
      @type kubernetes_metadata
    </filter>
    # we send the logs to Elasticsearch
    <match **>
      @type elasticsearch_dynamic
      @log_level info
      include_tag_key true
      host "#{ENV['FLUENT_ELASTICSEARCH_HOST']}"
      port "#{ENV['FLUENT_ELASTICSEARCH_PORT']}"
      user "#{ENV['FLUENT_ELASTICSEARCH_USER']}"
      password "#{ENV['FLUENT_ELASTICSEARCH_PASSWORD']}"
      scheme "#{ENV['FLUENT_ELASTICSEARCH_SCHEME'] || 'http'}"
      ssl_verify "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERIFY'] || 'true'}"
      reload_connections true
      logstash_format true
      logstash_prefix logstash
      <buffer>
        @type file
        path /var/log/fluentd-buffers/kubernetes.system.buffer
        flush_mode interval
        retry_type exponential_backoff
        flush_thread_count 2
        flush_interval 5s
        retry_forever true
        retry_max_interval 30
        chunk_limit_size 2M
        queue_limit_length 32
        overflow_action block
      </buffer>
    </match>

# fluentd-daemonset.yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd
  namespace: kube-logging
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentd
  namespace: kube-logging
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - namespaces
  verbs:
  - get
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd
roleRef:
  kind: ClusterRole
  name: fluentd
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: fluentd
  namespace: kube-logging

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: kube-logging
  labels:
    k8s-app: fluentd-logging
    version: v1
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    matchLabels:
      k8s-app: fluentd-logging
      version: v1
      kubernetes.io/cluster-service: "true"
  template:
    metadata:
      labels:
        k8s-app: fluentd-logging
        version: v1
        kubernetes.io/cluster-service: "true"
    spec:
      serviceAccount: fluentd # if RBAC is enabled
      serviceAccountName: fluentd # if RBAC is enabled
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: fluentd
        image: fluent/fluentd-kubernetes-daemonset:v1.1-debian-elasticsearch
        env:
        - name: FLUENT_ELASTICSEARCH_HOST
          value: "elasticsearch-client.kube-logging.svc.cluster.local"
        - name: FLUENT_ELASTICSEARCH_PORT
          value: "9200"
        - name: FLUENT_ELASTICSEARCH_SCHEME
          value: "http"
        - name: FLUENT_ELASTICSEARCH_USER # even if not used they are necessary
          value: "elastic"
        - name: FLUENT_ELASTICSEARCH_PASSWORD # even if not used they are necessary
          valueFrom:
            secretKeyRef:
              name: elasticsearch-pw-elastic
              key: password
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: fluentd-config
          mountPath: /fluentd/etc # path of fluentd config file
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: fluentd-config
        configMap:
          name: fluentd-config # name of the config map we will create

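Fluentd can be thought of as processing events in the order Input (source) > Filter (filter) > Buffer (buffer) > Output (match).

- source : defines what to collect

- source.@type : defines how to collect it. tail reads a file by following it, like the tail command

- source.pos_file : a file that tracks the inode of each collected file and records the last position read

- source.parse.@type : parses the incoming data. Here it is parsed as json before being passed on. A regular expression can be used instead, or the data can be passed on without any processing

- filter : defines filtering conditions on specific fields. Plugins such as grep and parser can be used. kubernetes_metadata requires a separately installed plugin

- match : defines where and how logs are output. stdout, forward, file and others can be used. With elasticsearch_dynamic, output settings can be declared dynamically

- match.buffer : buffers logs coming from the input and flushes them to the output when a chunk reaches its size limit (chunk_limit_size 2M here) or the flush interval (flush_interval 5s here) elapses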

Verify that Fluentd was created

Verify Fluentd log collection

Before customizing Fluentd, confirm that logs are actually being collected.
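Both checks can be done from the command line. This is a minimal sketch: the helper names fluentd_pods and logstash_indices are hypothetical, and the default URL assumes a local port-forward such as kubectl port-forward service/elasticsearch-client 9200:9200 -n kube-logging is running in another terminal.

```shell
# Sketch: list the Fluentd DaemonSet Pods, one per schedulable node.
# Assumption: kubectl already points at the target cluster.
fluentd_pods() {
  kubectl get pods -n kube-logging -l k8s-app=fluentd-logging -o wide
}

# Sketch: list the logstash-* indices that Fluentd writes to
# (logstash_format true, logstash_prefix logstash in fluent.conf).
logstash_indices() {
  # $1 = password of the elastic user
  # $2 = Elasticsearch base URL (assumed default: local port-forward)
  curl -s -u "elastic:$1" "${2:-http://localhost:9200}/_cat/indices/logstash-*?v"
}
```

If collection is working, logstash_indices should list at least one index named logstash- followed by a date, with a document count that grows over time.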


https://coding-start.tistory.com/m/383
